Where Did We Come From and Where are We Going? -- Part 1 of 3

When you turn on a computer these days, you expect the interface to be intuitive. We see icons that represent functions of the operating system or the programs we run, and it's all pretty simple once you sit down and explore. "Control Panel" holds an array of icons that let us customise the interface or the devices on the machine. "Recent Documents" lists the documents you opened recently. There's an area that tells you which printer drivers you have installed. And, in the Windows world, there's a whole lot of help available by pressing the F1 key wherever you are.

But take yourself back a mere 15 years and you'd find yourself in a world of uncertainty. Heck, take yourself back 50 years and you would look at a computer the same way Homer Simpson looks at his nuclear plant control panel: with utter confusion. If you sat down at your fully booted computer and saw the image to your right, what would be the first thing you'd do? Personally, I would type cd \ and then dir. Then, if I saw the directory of my favourite application, I would type cd lemmings and then lemmings. But that's just me.

Everything in the computer industry advances fairly quickly, with the exception of the floppy drive, which is the world's #1 Most Tenacious Device. It just won't go away. Five years ago, the industry said, "The floppy drive is officially dead." As most of us know, that is far from the truth, even today. However, anyone who has used or seen an LCD monitor, bought a new computer to replace an old one, picked up a new printer -- of whatever variety -- or even bought a DVD RW drive knows that progress is inevitable. In fact, it has become expected.

So if everything has arrived where it is today via progress, a) where are we going and b) where did we come from? To understand the former, we must know the latter. The history of computing goes back a very long way -- centuries, if you include automated mechanical machines -- but the first general-purpose electronic digital computer was completed in 1945 under the name of ENIAC: the Electronic Numerical Integrator and Computer, seen to the left. Huge, isn't it? That's one computer system built to perform one task: calculation.

What makes a computer digital is that everything it handles, instructions included, is represented as numbers. If you say to your friend, "Pick up a newspaper for me, please," you are using language -- English -- for communication. If you want to say the same thing to a computer, you might say, "1001010110001010000 1110100100010101101110101 0100010101101001010". (Note: For the true geeks out there, that isn't real code, just an example of what it would look like.) The ENIAC was instructed at an even lower level than that, by setting switches and plugging in cables, and if you fed it that message, it would blink its lights back at you and nothing would happen, because it doesn't have arms or a wallet or a bank account and it doesn't go to the store to pick up anyone's newspaper. What it could do, however, was calculate a 30-second trajectory of a shell (as in, an artillery shell) in 20 seconds. A marvel of modern technology in the '40s! The only thing is, it took 2 days to input the code. These days, there is a program for that, and for virtually anything else you can think of, that takes about 10 seconds to enter the numbers and renders a result instantly. In the mid-80s, watches with calculators were being sold for $15. Those watches had more calculating power than the ENIAC, but without the ENIAC, we'd never have had those watches.

And without Grace Hopper, a U.S. Navy officer who retired as a rear admiral, computer languages would look very different. Rather than plug in the instructions one click at a time, Grace pioneered the compiler and an English-like language called FLOW-MATIC, which led directly to COBOL -- Common Business-Oriented Language. This allowed people to type instructions in a language they understood (not quite English, but not far removed from it, either). Thanks, Grace! You're peaches!

The ENIAC relied on serious hardware: lots and lots of vacuum tubes and relays. Sporting one of these babies in your office was not only ostentatious, it was noisy!

A few years went by and we saw the advent of UNIVAC, which predicted Eisenhower would win the 1952 presidential race in the United States of America, and then miniaturisation started. The transistor -- which can be thanked for making radios so portable in the 60s -- was created and replaced those huge vacuum tubes that generated so much heat and took up so much space. Then transistors shrank further once they were made from silicon and packed together onto a single chip, as seen on the right. Looking at the chip on the right, every one of those gold dots represents a transistor. If silicon rings a bell, it should: Silicon Valley in California, U.S.A., is where so much of the activity in the computer industry has come from in the past 30 years. So you might ask: Does this chip do everything in a computer?

Not quite. Like the pieces of a building, everything has its place and there are many parts that do the job, but the transistors, which reside in chips, are what do the calculations we want. What we needed to make a personal computer was for someone to build a little box that would not fill up the entire basement and that allowed us to input the data.

In 1975, Ed Roberts created the Altair, a small computer that you could order from a magazine, and when you unpacked it from the box, you had to assemble it yourself! Click on the image to the left to see a larger picture of the Altair. He estimated he could sell 800 of these units in a year, which was a pretty wild-eyed expectation at the time. A month after he placed the ad in an electronics magazine, he was selling 250 per day. Altogether, he sold 40,000 units in 3 years. Up to this point, the Intel 8080, the processor that drove the Altair, was used for things like traffic lights and calculators. Traffic lights? But what about computers? It wasn't Intel who came up with the idea to use their chips for computers; it was computer hobbyists. So when you finally put it together, you could attach a monitor and keyboard and start using it, right? No. In fact, you couldn't attach anything to it. It was just a box with lights. What you could do, though, was flip a bunch of switches. There was one row of switches you used to give an instruction, another switch to enter it, then back to the same row for the next instruction. When you completed your instructions and told the computer to run them, some lights turned on. Success! Isn't computing fun?

It was for a lot of people -- a lot of nerds. These nerds often gathered in groups like the Homebrew Computer Club and included the likes of Paul Allen and Bill Gates, who went on to form a company called Microsoft -- short for Micro-computer Software. Paul Allen was the guy who wrote a program for the Altair and fed the program to the box via paper tape (attached to a controller inside the box). This was the first time someone did not have to laboriously click in the code. The program worked and everyone was excited. So excited, in fact, that by the end of 1975, there were dozens of micro-computer companies (remember: back then, "computers" were typically enormous, so "micro-computers" was an apt name for the Altair and others of its ilk).

Bill Gates -- bottom far left -- and Paul Allen -- bottom far right -- with the rest of the Microsoft employees in the mid-70s.

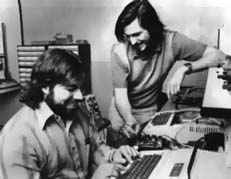

Two other enthusiasts who belonged to this club were named Steve Jobs and Steve Wozniak. Some people may have heard of these two, but for those who haven't, they created Apple Computer. It was Ed Roberts -- the Altair inventor -- who created the concept of a personal computer, but these two Steves created the personal computer as we know it. Steve Wozniak had the marvellous brain that created the Apple I and Apple II. He turned everything that was previously big -- like the floppy drive controller -- into something very small. Something you would respect even today by looking at it.

It was Steve Jobs, a technical whiz himself, who was able to see a future for this sort of computer for many people at home. They sold hundreds of the Apple I, which consisted of computer parts built onto a wood frame. Yes: Wood. Click here to see the Apple I in all its glory. Jobs thought at the time, "For every one of these guys who love to take our machine and get their own parts to build on top of it, there are probably a thousand people who would like to use the machine for its programming capabilities, just like I did with the mainframes when I was 10 years old." With this in mind, he set out to find venture capital. He found it, insisted that the Apple II be compact enough to fit on a desk, and the Apple II became an incredible success. And this was a mere two years after the Altair came onto the scene. Click here to see the commercially successful Apple II.

But without respectable applications, the nerd factor only goes so far. Douglas Adams, writer of The Hitchhiker's Guide to the Galaxy, described a nerd as "someone who uses a telephone for the purpose of talking about telephones. Therefore, a computer nerd is someone who uses computers for the purpose of using computers." This sort of niche would carry the Apple II a certain distance -- and it did; VERY far -- but what it needed to go truly mainstream was an application people would go crazy over. They'd hear about the application and HAVE to buy the Apple to use it. In 1979, Dan Bricklin and Bob Frankston released VisiCalc -- Visible Calculator. It was the first electronic spreadsheet for a personal computer and, as anyone who uses spreadsheets will tell you, without one, everything they do would take weeks or months longer, with an enormous amount of rework if even one mistake was made. Sadly, they never patented the program. They were just inventing it for the sake of helping people. With the advent of the spreadsheet on the Apple, a craze came over businesses. Accountants could figure out how to put questionable numbers in one column to make the long-term results in another column come out in their favour. "Look, boss! The computer says so." Why would you question the results of a computer?

With Steve Jobs on the cover of Time and Steve Wozniak growing richer than rich, and everyone reaping rewards for buying all things Apple, everything seemed pretty comfortable for everyone. But not everyone was happy. A company that had been making large computers for decades started to take notice. This company's name was synonymous with computers: IBM. They saw a market becoming huge and wanted a piece of the action. Next week, you'll see what they did and how.

If you have any comments, questions or concerns about this newsletter, feel free to e-mail me at sean@beggs.ca.